Digital Twin Showcase: Overcoming Challenges by Working Together

Introduction

In recent years, digital twins have begun to revolutionise many areas of project planning, asset management, and even environmental policy. Yet, to realise the full potential of a holistic, digital environment, effort must continue to sustain growing collaboration, data standards, and ultimately trust in an expanding industry.

Digital twins virtually represent the functionality of a physical system, asset, or component. They exist through the provision of accurate, timely, and relevant data about the state of the real world.

In all but the simplest systems, these data feeds are sourced from a range of different data suppliers, technology platforms, and sensors, often in a variety of data formats. Ensuring that each of these data sources works with the others is a significant task, one that demands as much from the technology as it does from the disparate operators, who must collaborate to an agreed standard.

These socio-technical challenges will be key in defining the long-term impact of digital twin technology, so it is important that any interested industry address these issues as soon as possible, including the earth observation sector.

The Catapult looks to the established digital twin industry in the built environment to demonstrate how cross-sector collaboration and leadership can guarantee the effectiveness of a digital twin.

The Digital Twin Hub – born out of the Centre for Digital Built Britain and now run out of the Connected Places Catapult – has created the Gemini Principles, guidelines identifying three clear requirements to embrace when embarking on a digital twin project to ensure its sustainability and long-term viability:

- Have a clear purpose,

- Be trustworthy,

- Function effectively.

In this blog, we will discuss why each of these principles is critical to success and look at how they are being applied in the earth observation community.

Purpose

Digital twins are a means to an end. They require a clear purpose that describes an impact objective which is not tied to a specific technology, but to which a digital twin-type approach would contribute value. In other words, what asset or system will your digital twin represent, who is it for, and which decisions does it help facilitate?

Importantly, a digital twin doesn’t need to mirror everything about the original system. It is easy to imagine that a digital twin’s purpose is to exactly replicate its physical counterpart. However, a realistic representation of every feature is neither necessary nor recommended. Instead, a digital twin should produce relevant abstractions of the physical asset, where the aim is to develop a virtual environment that is fit for purpose, with a level of fidelity that depends only on the primary use case.

Therefore, understanding this purpose allows the developers to identify the right sources of data, define the appropriate monitoring frequency, and better develop accurate models which are not biased by unnecessary parameters.

Value Creation and Insight

The fundamental purpose of any digital twin is to improve the whole-life performance of a given asset. Performance can be defined in multiple ways, with many users looking for financial improvements based on the return on investment (ROI) of developing a digital twin infrastructure.

Other improvements include:

- Environmental impact – optimising energy consumption to reduce the carbon footprint of an asset or service or improving biodiversity.

- Social benefits – providing optimal working or living conditions.

- Safety and Security – effective prediction and monitoring of hazardous situations to prevent potential harm to users, communities, and the environment.

Many of these benefits will also provide a financial benefit in addition to their primary purpose.

Trust

For a digital twin to be useful, both the users and data providers need to have trust in the system. Users need to know that the outputs it provides are accurate, while developers and data providers need to feel secure that their data is protected against malicious attacks.

Security

In the digital world, data is a rich commodity. Like any commodity, data must be protected from potential threats, meaning security measures are a paramount consideration when designing digital systems.

Compromises in data security can have devastating effects. Illicit access can damage data integrity, reducing its worth or usefulness to the business. These intrusions can risk non-compliance with privacy regulations (e.g., GDPR), causing potential financial or reputational damage. Impaired digital twin systems can impact functionality, creating risks to productivity and maintenance.

As the digital twin sector grows and platforms shared by multiple organisations start to proliferate, software providers will need to prioritise keeping each organisation’s confidential data separate from that of others hosted on the same platform, and to provide evidence that they do so.

Quality

Data quality is integral to the primary role of a digital twin, that is, making accurate, informed decisions.

How do you measure data quality? The data quality assessment framework (DQAF) identified six key objectives:

- Completeness – Are there any gaps in the dataset (e.g., are there missing dates)?

- Uniqueness – Do these data exist elsewhere? Is this the best source of these data?

- Consistency – Are changes in data due to observed events, or changes in the methodology?

- Timeliness – How up to date are the data? Are they received in time for action?

- Validity – Do the data conform to the standards required of them (e.g., format)?

- Accuracy – How well do the data reflect real life?

To maintain the fidelity of the digital twin, users must ensure quality across more than just datasets. Good data analytics and management processes are vital to maintaining the consistency, reproducibility, and long-term quality of digital twin outputs. Where possible, manual data handling should be replaced with automated processes, with robust validation protocols.
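As a minimal sketch of what such automated validation might look like, the Python below runs four of the checks listed above (completeness, uniqueness, validity, and timeliness) against a daily time series. The function name `check_quality`, the input layout, and the thresholds are all assumptions made for illustration; they are not part of the DQAF or of any particular digital twin platform.

```python
from datetime import date, datetime, timedelta


def check_quality(readings, start, end, received_at, max_age):
    """Run simple automated checks on a daily time series.

    `readings` is a list of (date, value) tuples. The checks and the
    `max_age` threshold are illustrative assumptions only.
    """
    days = [d for d, _ in readings]
    # Every calendar day we expect to see between start and end, inclusive.
    expected = {start + timedelta(days=i) for i in range((end - start).days + 1)}

    return {
        # Completeness: expected dates that have no reading.
        "missing_dates": sorted(expected - set(days)),
        # Uniqueness: dates that appear more than once.
        "duplicate_dates": sorted({d for d in days if days.count(d) > 1}),
        # Validity: values that are not numeric.
        "invalid_values": [
            (d, v) for d, v in readings if not isinstance(v, (int, float))
        ],
        # Timeliness: is the newest reading recent enough to act on?
        "timely": bool(days) and (received_at.date() - max(days)) <= max_age,
    }


# Hypothetical sensor feed with one gap, one duplicate, and one bad value.
readings = [
    (date(2024, 1, 1), 4.2),
    (date(2024, 1, 2), 4.5),
    (date(2024, 1, 2), 4.5),
    (date(2024, 1, 4), "n/a"),
]
report = check_quality(
    readings,
    start=date(2024, 1, 1),
    end=date(2024, 1, 4),
    received_at=datetime(2024, 1, 5, 9, 0),
    max_age=timedelta(days=2),
)
```

Consistency and accuracy are deliberately left out: both need reference data or knowledge of the collection methodology, so they are harder to reduce to a generic check.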

The best way to ensure data quality across a digital twin project is to set out clear data governance from the beginning, including data architecture, with clearly defined responsibilities and explanations on how data is stored, indexed, accessed, and updated.

Function

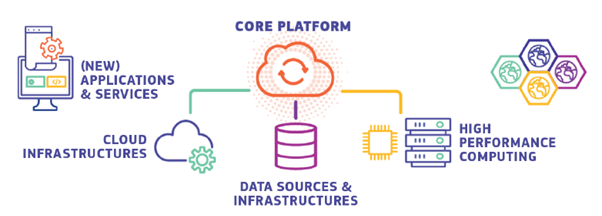

Developing a functional digital twin is a significant challenge. Relevant data must be sourced, predictive models need to be integrated, and a core platform must be developed that can assimilate diverse data, allow user interaction, and generate clear and intuitive information (e.g., Fig. 1).

Figure 1: Description of Destination Earth (DestinE) core platform. Source: European Commission

Data

Good data curation is critical in knowledge discovery and innovation because if you cannot access the data, it serves no one.

While many sources of data exist (both observed and modelled), their effectiveness is largely defined by how easily they are integrated into a system. Governance on data curation and storage is critical – including policies on data retention and the avoidance of unnecessary duplication of data.

The FAIR guiding principles for scientific data management, published in the journal Scientific Data in 2016, provide a framework to improve the Findability, Accessibility, Interoperability, and Reusability (FAIR) of data.

FAIR principles set a standard for data management, which includes clear metadata to describe the contents of each data product, ensuring the data are understood by the user and can be interpreted by all systems and cataloguing services.

Cloud Computing

Cloud computing is revolutionising the way businesses work, moving away from self-managed datacentres to cost-effective remote computing, where software, data processing, and storage are accessed through the internet. Digital twins are ideal candidates to take advantage of cloud computing through the consumption of data feeds from remote monitoring platforms.

While cloud-based systems solve many data handling issues, a functional digital twin still requires certain standardisations in areas such as software design to work effectively. Whether it’s through the use of a common database system or by prescribing specific programming languages, standardising systems and workflows will promote greater uptake by users and increase the reusability of software and algorithms.

As a digital twin matures, it will likely acquire greater functionality, with a larger and more diverse user base. Cloud systems, such as Amazon Web Services or Microsoft Azure, provide scalability: changes in the number of users and services can be accommodated quickly by purchasing more remote computing resources.

Earth Observation

In the Earth Observation (EO) industry, accessing data and standardising data processing pipelines have historically been an issue. However, recent innovations and applications of FAIR principles are greatly improving the applicability of EO data to digital twins, with regularly updated datasets starting to be available for real-time ingestion into live systems.

Public providers of freely available EO data (e.g., Copernicus Sentinels) and commercial EO suppliers (e.g., Maxar) produce a wide range of data products, with suppliers based all over the world. Each provider typically has a unique distribution method, while products are provided in a range of data formats, with unique metadata to describe their contents.

Cloud-based, high-performance geospatial software-as-a-service platforms such as Google Earth Engine and Microsoft’s Planetary Computer have recently centralised many public EO products. These platforms provide archives of geo-located, analysis ready data (ARD) in easy-to-use Cloud-Optimised GeoTIFFs, or COGs.

Importantly, users can make significant savings in time and money because they no longer need to acquire, store, process, and maintain these data themselves. Instead, data can be analysed and combined with other geospatial data using cloud systems.

However, very high-resolution data typically come at a cost and do not often appear on these cloud-based systems. Finding these data is nevertheless becoming simpler through interactive online portals, where data can be acquired under a transactional, subscription, or annual/perpetual licence.

The growing uptake of the SpatioTemporal Asset Catalog (STAC) standard, which provides a common metadata framework to index, store, and share earth observation data, has enabled data provisioning platforms to adhere more easily to FAIR principles.
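To make the idea of a common metadata framework concrete, the sketch below builds a single STAC Item as a plain Python dictionary and checks that the top-level fields an Item is expected to carry are present. The scene identifier, footprint, and URL are invented for illustration, and `missing_item_fields` is a hypothetical helper rather than part of any STAC library; a real project would more likely rely on an established STAC client or validator.

```python
# Top-level fields a STAC Item is expected to carry. Strictly, `bbox` is
# only required when `geometry` is not null, as is the case here.
REQUIRED_ITEM_FIELDS = (
    "type", "stac_version", "id", "geometry",
    "bbox", "properties", "links", "assets",
)


def missing_item_fields(item):
    """Return the names of expected fields absent from a STAC Item dict."""
    missing = [name for name in REQUIRED_ITEM_FIELDS if name not in item]
    # Every Item also needs an acquisition time inside `properties`.
    if "datetime" not in item.get("properties", {}):
        missing.append("properties.datetime")
    return missing


# Hypothetical item: the id, coordinates, and URL are illustrative only.
item = {
    "type": "Feature",
    "stac_version": "1.0.0",
    "id": "example-scene-20240101",
    "geometry": {
        "type": "Polygon",
        "coordinates": [[
            [-1.0, 51.0], [0.0, 51.0], [0.0, 52.0], [-1.0, 52.0], [-1.0, 51.0],
        ]],
    },
    "bbox": [-1.0, 51.0, 0.0, 52.0],
    "properties": {"datetime": "2024-01-01T10:30:00Z"},
    "links": [],
    "assets": {
        "visual": {
            "href": "https://example.com/example-scene-20240101.tif",
            # Media type commonly used for Cloud-Optimised GeoTIFFs.
            "type": "image/tiff; application=geotiff; profile=cloud-optimized",
        }
    },
}

problems = missing_item_fields(item)
```

Because every provider describes where, when, and what in the same fields, a catalogue can index items from different suppliers without bespoke parsing for each one.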

Closing – Working better, together

Socio-technical is an appropriate term for the challenges faced when running a digital twin project. While the technical challenges are apparent, the task of disparate, unrelated groups working collectively toward a common goal should not be underestimated.

The Gemini Principles were developed in conjunction with the national digital twin (NDT) programme – an ecosystem of independent digital twins, connected in a federated system through the sharing of data.

To facilitate collective working amongst a range of users, the Gemini Principles provide a consensus on information management within a digital twin framework. As such, they bring economic benefits to interested parties, through the sharing of resources in data, storage, and energy. Ultimately, these symbiotic partnerships accelerate understanding for all parties, provide greater insight for decision making and reduce associated costs.

Examples of connected twins could include energy, transport, and water facilities, each developed and operated independently yet benefiting from the sharing of information and data. For example, the twins could together model the expected impact on the energy grid, or the disruption to the transport system, caused by building a new wastewater treatment facility.

The Gemini Principles are appropriate for all types of digital twin, federated or isolated, as they both optimise the curation of functional data and provide the capability for future assimilation into a federated ecosystem.

Ultimately, they describe the need for disparate stakeholders to work together to define the purpose of the twin, and to build trust through sharing of quality and timely data which follow agreed standards in data governance. Together, these guidelines define the effective functionality of a digital twin project.

Call-to-Action

The final article in our series on Digital Twins will examine the opportunities that the burgeoning digital twin landscape presents to industry in data provision and product commercialisation, analysis platform hosting, and hardware for sensing and data collection, with a focus on those affecting the earth observation sector.

Talk to us at the Satellite Applications Catapult if you are looking for guidance as to where to start with the use of EO data and technology in monitoring your assets and developments – and if you have an idea of where you could step in within the data pipeline.